In web applications, poor performance can often be traced to how the database is used. The database itself may be excellent, but misuses like excessive or poorly-written queries and missing indexes can really bog an application down.

I recently rewrote some reporting code to take better advantage of what the database can do. At the cost of a little database magic, I got simpler Elixir code and massively improved performance.

Since the actual project is not public, I’ll explain this approach using a fictional example. Note: whenever I mention a database feature, I’m referring to PostgreSQL, but a similar concept may be available in your database of choice.

The Setup

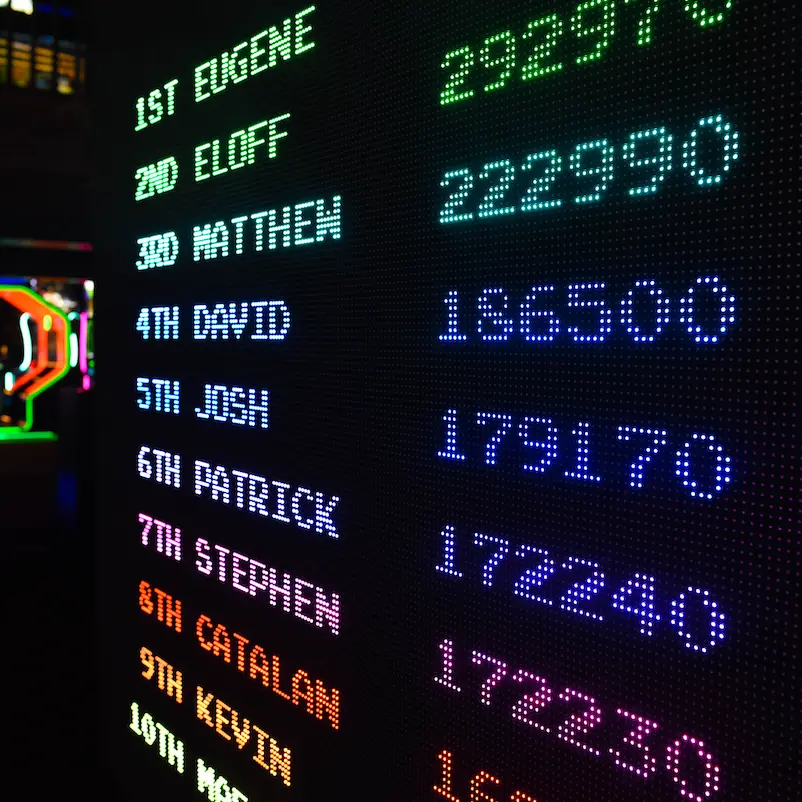

Suppose we run an online game in the style of Civilization.

Various events, such as battles and discoveries, can affect a player’s score.

These events are stored in different tables.

There are some complex relationships in this game–a single event can affect multiple players, and a single player can be involved in multiple games.

To calculate a player’s current score, we need to take into account the entire history of all their games. This query requires a lot of joins and is fairly expensive.

If we want to show top scores across all players, how can we do it?

Option 1: Just Run The Query

One approach is to compose and run the entire query on every page load.

This has two downsides:

- It may require a lot of application code.

- More importantly, it gets a little more expensive with each game event added to the history.

Option 2: Denormalize

Another approach is to denormalize the data.

For instance, we could store all game events in a single events table which includes the game_name and has many columns, each of which pertains to some events and not others.

For example, battle_type applies only to some events, discovery_type to others, etc.

This might make our “top scores” query cheaper, since it would require fewer joins. But it has major downsides.

- It’s inefficient for storage space.

- It doesn’t let you use

NOT NULLto guarantee things like “every discovery has a category”. - It can make other queries difficult.

- Most importantly, it can lead to consistency issues. For example, if the name of a game is stored on every

eventsrow, changing that name without updating all those rows will create confusion.

Option 3: Create A View

A database view is like a table, but it’s defined as a query.

If our schema were as simple as users and events, we could define a view like this:

CREATE VIEW scores

AS

SELECT

users.id AS user_id

sum(event.points) AS score

FROM users

JOIN events ON user.id = event.user_id

GROUP BY users.id;

This gives us a denormalized representation, but the underlying, canonical data would still be normalized.

Creating a database view would make our queries simpler to write–as simple as SELECT score FROM scores WHERE user_id = 5.

But in our example, the underlying data isn’t that simple. Under the hood, a view is simply running the original query, which means its performance is the same. So this doesn’t solve our problem.

(Temporary tables and Common Table Expressions are variations on this idea with some nuances, such as temporary tables supporting indexes and being tied to the database transaction or session.)

Option 4: Create A Materialized View

Materialized views are defined like views, but the key difference is that they cache their data.

In other words, the underlying query is run once and the results are stored. At query time, we don’t have to repeat that work; we just query the cached data. A materialized view can even have indexes.

If we take our previous CREATE VIEW ... statement and instead CREATE MATERIALIZED VIEW ..., we’ll be able to run SELECT score FROM scores WHERE user_id = 5 without repeating the underlying query; we’ll just be working with its cached results.

This is great for query speed, but it has a couple of downsides.

- Freshness: A materialized view does not get updated as new data comes in. We have to explicitly call

REFRESHwhen we want it to rebuild itself with the latest data. And we have to decide when to do that–on a timer, using a trigger, or with some other strategy. - Efficiency: When we refresh it, the materialized view has to start from scratch, calculating the whole history of every player’s score, even if some of them have not changed. Refreshing the table might be fast today, but as our data grows, it will take longer and longer.

We can do better.

Option 5: Roll Your Own Materialized View

The core concept of a materialized view is this: a table whose data is calculated by querying other tables.

What we’d like to avoid is a complete REFRESH from scratch.

To roll our own materialized view, we need:

- A plain table - in this case,

scores- where we’ll store query results. (This table can have whatever indexes we want.) - The query to calculate a single row (one player’s current score)

- Database triggers to update a single row in

scoreswhen any game event is added

This is the crucial part: when a game event occurs for one player, we will recalculate that one player’s score and update that one player’s row in the scores table.

PostgreSQL triggers let us say things like “after a row is inserted into battles, update that player’s scores row.”

The update is specified as a database function, which can calculate the updated scores row and upsert it.

Like a normal materialized view, this scores table will be speedy to query.

And the trigger-based approach addresses the downsides of the materialized view:

- Freshness: The

scoresdata will always be fresh because the trigger to update it will run in the same database transaction as the triggering event. In other words, when the transaction to updatebattlesis complete,scoreswill be updated, too. - Efficiency: When the trigger fires, we’ll only recalculate the relevant player’s score; the other players’ rows can stay as they are.

The downside is that using a trigger means that we’re adding work at insertion time–inserting into battles becomes more expensive.

That might matter and it might not.

If it’s a problem, here are two possible solutions.

First, rather than doing the expensive “calculate and update” steps, we could have the trigger insert an “update job”, to be actually processed later by application code which watches that table.

(In Elixir, Oban could do this.)

This puts “freshness vs effort” on a continuum: the more aggressively we work that queue, the fresher the scores table will be.

Second, if calculating a single row gets slower over time–because a single player’s score is based on an ever-increasing history–we could perform some intermediate caching to speed it up.

For example, we could have a game_scores table to summarize player scores per game, then overall scores could be calculated from that.

Or we could have a table of monthly totals, so that the latest player score would be based on the latest monthly score plus any games since then.

Some Words of Caution

As you can see, database triggers can be very helpful. But they do bear a strong resemblance to callbacks in application code. Callbacks can be hard to understand, with one action triggering another in a hard-to-follow sequence. I’ve personally seen them lead to massive, expensive bugs.

Like, “we’re paying full-time employees to deal with the ongoing financial discrepancies created by our out-of-control callback code” bugs. 😱

However, when used the way I’ve shown here, triggers are simply “the database keeping the database consistent”, as my colleague Scott Hamilton put it. We want the database to keep the normalized data, to maintain a denormalized copy for easy querying, and to ensure that those two stay in sync. Maintaining consistent data is a core responsibility for databases, and a role where they shine.

However, I would suggest a few guidelines for triggers:

- Use triggers sparingly. Prove that you have a performance need before trying to optimize.

- Chain even more sparingly. If one trigger causes another, it may be hard to see the effects of a change.

- Keep the logic simple. Database logic is harder to debug, and when things to wrong, you don’t get stack traces and error tracking.

- Think about the cost at insert/update time. Triggers are run in the same transaction as the change which triggers them, slowing it down. That may be fine or may not be; if not, maybe insert an Oban job and work it out-of-band.

- Use migrations. Maybe this goes without saying, but database functions and triggers should be defined in migrations, to be version controlled and deployed with the application code they support.

Happy coding!

Further Reading

- Materialized View Strategies Using PostgreSQL shows example code for several of these approaches. The last one I described corresponds to the “Eager Materialized View”; the post shows how to create the functions and triggers that keep the data fresh.

- PostgreSQL triggers documentation.

DockYard is a digital product consultancy specializing in user-centered web application design and development. Our collaborative team of product strategists help clients to better understand the people they serve. We use future-forward technology and design thinking to transform those insights into impactful, inclusive, and reliable web experiences. DockYard provides professional services in strategy, user experience, design, and full-stack engineering using Elixir, Ember.js, React.js, and Ruby. From idea to impact, we empower ambitious product teams to build for the future.