This is the first in a two-part series to discuss the two blocking issues that must be resolved before we can release LiveView Native v0.2.0, which is going to be dubbed “the one you can use”. You can read part two here.

Back in September, I gave a keynote presentation on LiveView Native at ElixirConf. The presentation was originally intended to be the formal “launch” of LiveView Native’s SwiftUI client. We had made a ton of progress since presenting the proof of concept the year prior. However, in the 24 hours before my keynote I decided to change it from a “launch” to a “status update”. This was due to my gut feeling that we weren’t quite ready yet. I didn’t feel comfortable getting on stage and selling the audience on LiveView Native (LVN) as ready, because it wasn’t. Let’s discuss why that was and what we’ve been working on to get it ready.

The first thing you’ve got to understand is that I’ve been through several technology ecosystems/communities at this point in my career. When it comes to launching a framework or a product I’ve been burned by hype and broken promises too often.

It’s true, there is some acceptance of risk using a pre-1.0 product. But at the end of the day, managing expectations and how those expectations are communicated is one of the more challenging and important responsibilities of publishing software. There are people whose jobs depend on what you’re writing. If I convinced people LVN was ready when it wasn’t, those eager to adopt early would get burned and would be unlikely to come back. Crossing the adoption chasm at that point becomes far more difficult as those early adopters are your champions for evangelizing your efforts.

SwiftUI was chosen as our first target client for the following reasons:

- Most of our target developer market uses Apple products, so they have access to the development environment, Xcode.

- SwiftUI is a composable framework and, while not strictly necessary for LVN, having a composable framework makes the mental mapping from LVN back to the original framework very easy.

- SwiftUI demos very well.

- Between the Android and the iPhone ecosystems, SwiftUI is far more mature than Jetpack Compose so we had a deeper bench of experience to leverage.

- SwiftUI had been anointed by Apple as its de facto standard for application development of the future. This means all Apple devices can or will run SwiftUI apps, and Apple is actively migrating its library ecosystem over to SwiftUI.

Two key assumptions I had when we first began this effort were that we would be able to map SwiftUI views to markup “elements” and we’d be able to style those elements with element attributes. I was 50% correct.

It’s that 50% I was wrong about that has taken us so long and resulted in the change in my keynote at ElixirConf.

To understand why, let’s look at some arbitrary SwiftUI code:

```swift
VStack {
    Text("Top")
    Text("Bottom")
}
```

These are the Text and VStack views in SwiftUI. They pretty much do what you’re likely assuming: stack views vertically and render text. In LVN we can easily represent this as:

```heex
<VStack>
  <Text>Top</Text>
  <Text>Bottom</Text>
</VStack>
```

Let’s say we want to style the text. Our original assumption was that we could simply map SwiftUI modifiers to attribute key/value pairs on the LVN elements like so:

```heex
<VStack>
  <Text foreground-color="red">Top</Text>
  <Text font-weight="bold">Bottom</Text>
</VStack>
```

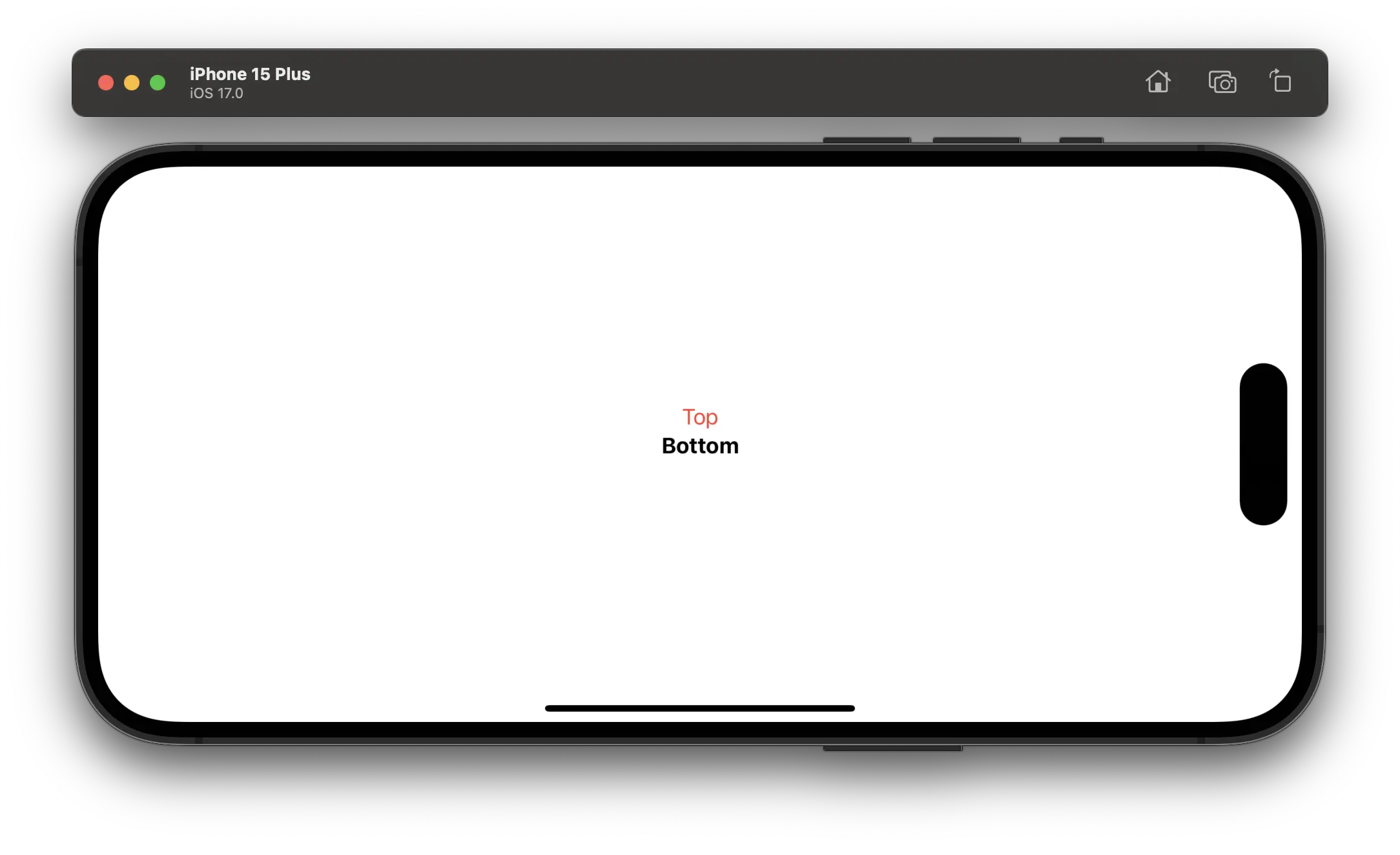

And this would be represented in SwiftUI as such:

```swift
VStack {
    Text("Top")
        .foregroundColor(.red)
    Text("Bottom")
        .fontWeight(.bold)
}
```

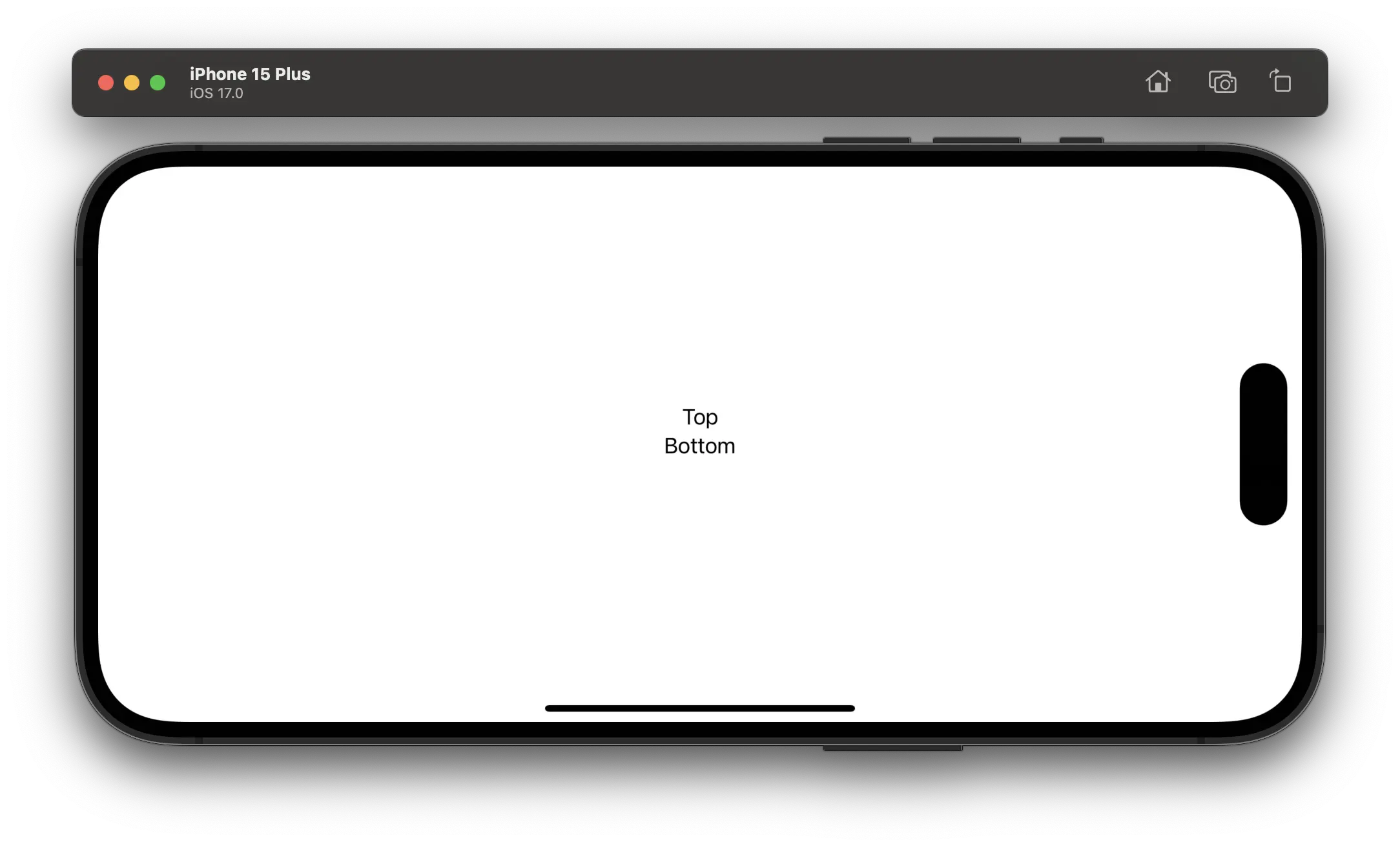

Seems pretty easy, right? Well, not so fast. You see, modifiers are not simply a flat set of rules. They can be nested infinitely and can take other views as arguments.

This would be as if CSS rules could take an HTML fragment as a value. And that HTML could have its own CSS rules, which would then take other HTML fragments as values, etc. The nested nature of modifiers means our nice and clean attribute approach was not going to work:

```swift
VStack {
    Text("Top")
        .foregroundColor(.red)
        .background {
            Rectangle()
        }
    Text("Bottom")
        .fontWeight(.bold)
}
```

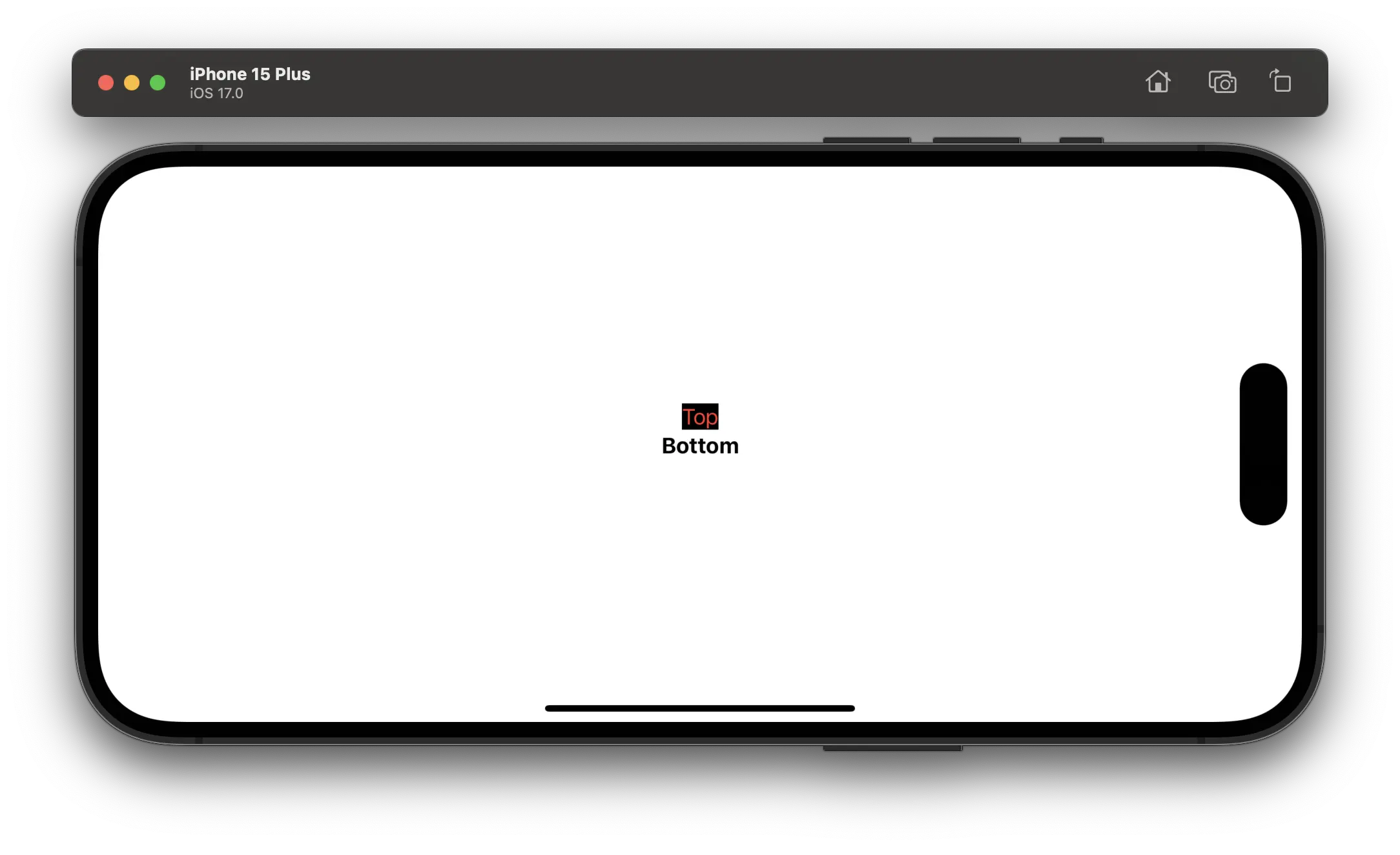

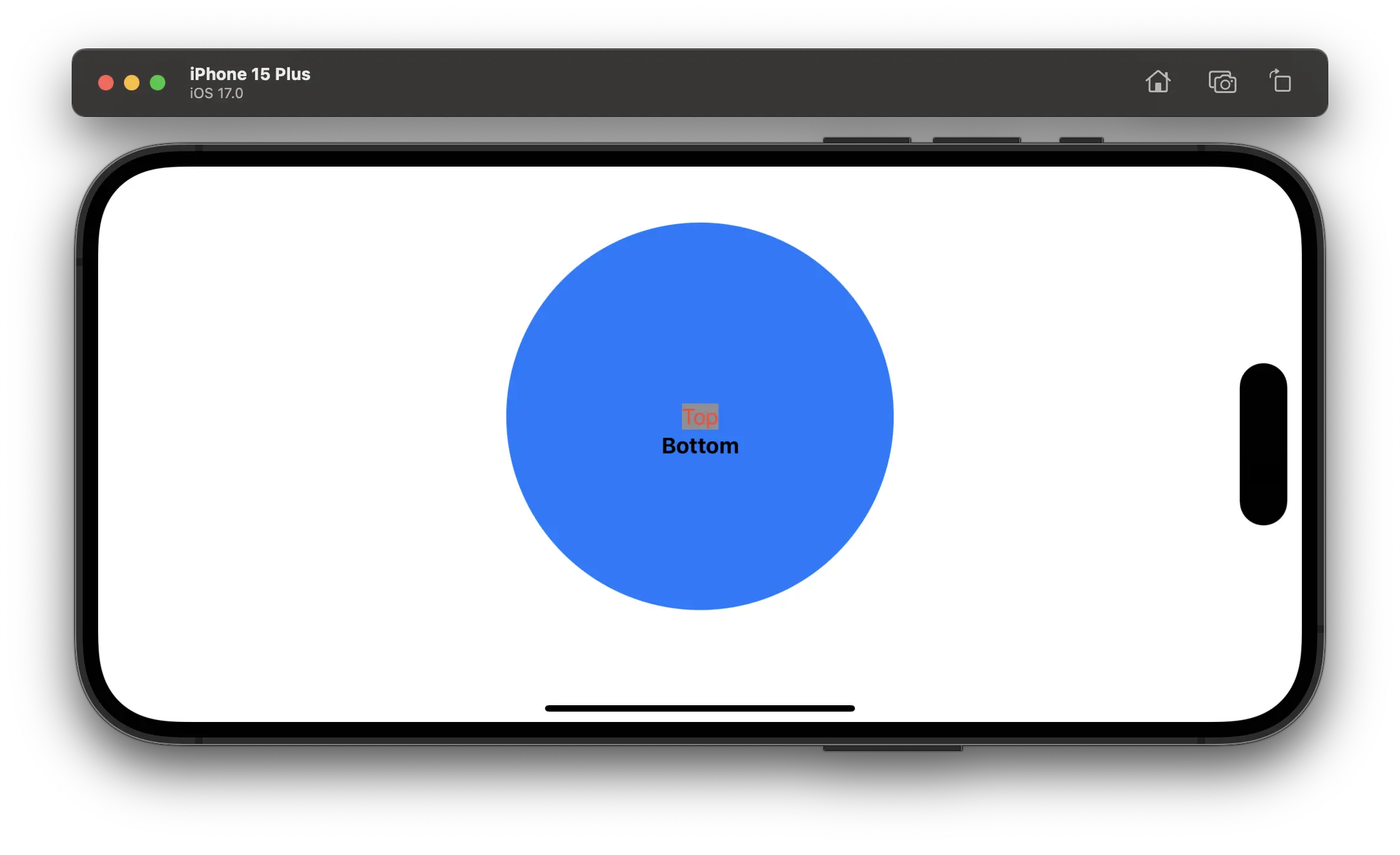

Here we’ve added a Rectangle to the background modifiers of the first Text view:

So how do we represent this in a key/value pair on the element? If you think you have a way to do it, let’s add some complexity. Because Rectangle is just a SwiftUI View, we can add modifiers to it:

```swift
VStack {
    Text("Top")
        .foregroundColor(.red)
        .background {
            Rectangle()
                .fill(.gray)
        }
    Text("Bottom")
        .fontWeight(.bold)
}
```

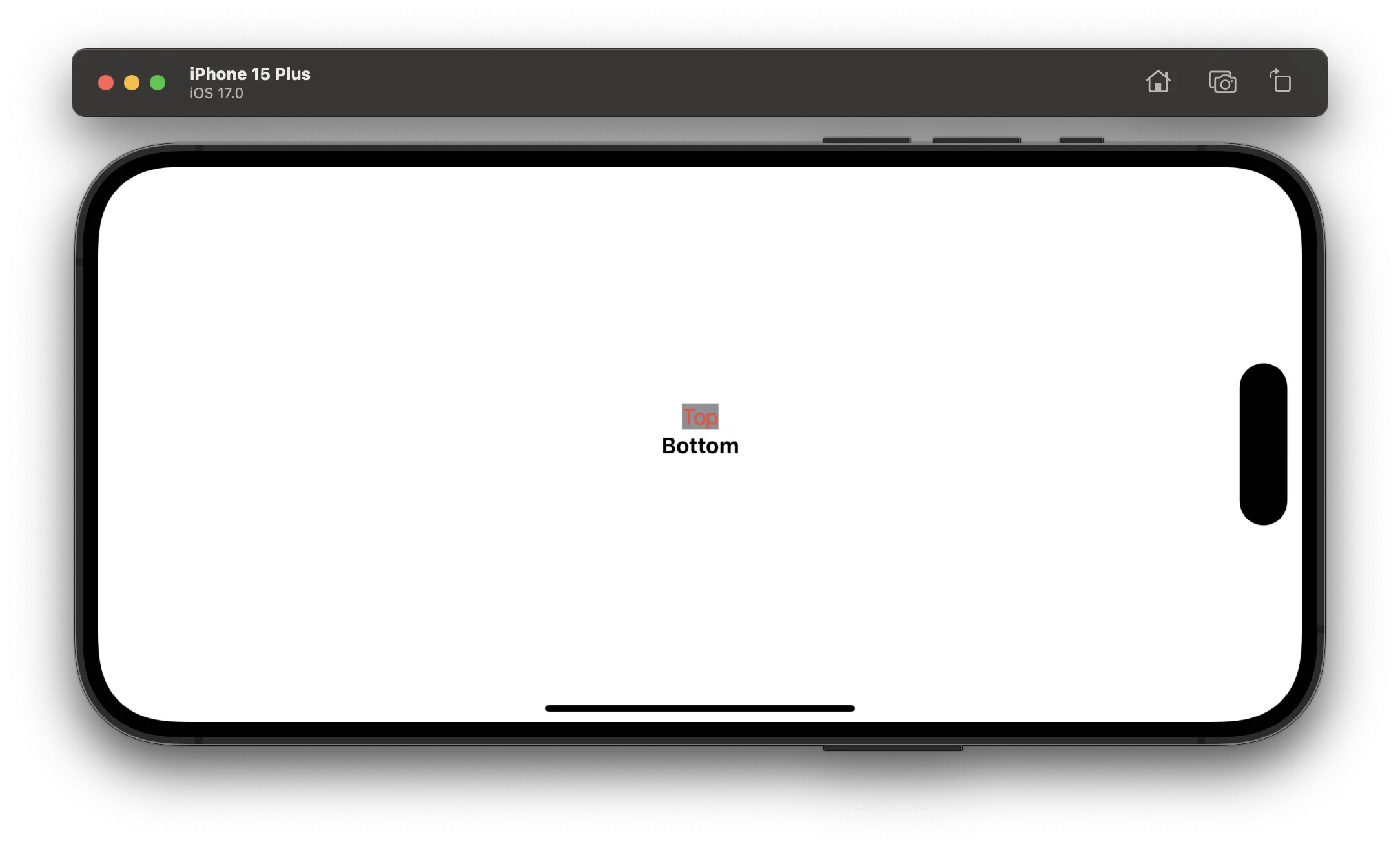

And let’s take it one step further and add a background modifier to the Rectangle that is already in the background of Text:

```swift
VStack {
    Text("Top")
        .foregroundColor(.red)
        .background {
            Rectangle()
                .fill(.gray)
                .background {
                    Circle()
                        .fill(.blue)
                        .frame(width: 300, height: 300)
                }
        }
    Text("Bottom")
        .fontWeight(.bold)
}
```

I hope you are starting to see the problem here. We cannot represent modifiers that can be written to any depth with a one-dimensional representation. But, we “solved” this problem months before ElixirConf. Our solution at the time was to create a special attribute on each element called modifiers and serialize the modifiers that we want to apply to a given element. For the content, which is the { ... } of the modifier, where we can add Views, we were passing an identifier that we then would look up within that element’s children. Something like this:

```heex
<VStack>
  <Text modifiers={foreground_color(:red) |> background(content: "child-rect")}>
    Top
    <Rectangle template="child-rect" modifiers={fill(:gray) |> background(content: "child-circle")}>
      <Circle template="child-circle" modifiers={fill(:blue) |> frame(width: 300, height: 300)} />
    </Rectangle>
  </Text>
</VStack>
```

The modifiers are referencing Elixir functions that we wrote. It took months of work to reproduce all of the known SwiftUI modifiers as composable Elixir functions along with a level of type checking. These would compose and be serialized into JSON. On the SwiftUI client, when building the ViewTree, we’d grab the modifiers value, deserialize the JSON once per view, and apply those modifiers. This “worked”, but about a week prior to the conference we discovered some very concerning performance costs.

Basically, we’d have a ton of deserialization overhead per view at runtime. This was evident in our ElixirConf Chat app which didn’t have a big chat backlog but had a very noticeable rendering latency due to this problem. The latency was not due to network as the web version rendered plenty fast.
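To make the per-view cost concrete, here is a minimal sketch of how composable modifier functions like the ones described above might accumulate their output. The module name, the function bodies, and the map shape are all assumptions for illustration; the real functions also did type checking and JSON encoding, which this sketch leaves out.

```elixir
defmodule ModifierSketch do
  # Hypothetical stand-ins for the real modifier functions. Each one
  # appends a plain map to the list of modifiers built up so far, so
  # calls can be chained with the pipe operator.
  def foreground_color(mods \\ [], color) do
    mods ++ [%{type: :foreground_color, color: color}]
  end

  def background(mods \\ [], opts) do
    mods ++ [%{type: :background, content: Keyword.fetch!(opts, :content)}]
  end
end

import ModifierSketch

# One list like this would be built, serialized, and attached to every
# element that carries modifiers.
modifiers = foreground_color(:red) |> background(content: "child-rect")
IO.inspect(modifiers)
```

Because each element carries its own serialized list, a client following this design has one payload to decode per view, which is where the overhead adds up.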

May Matyi built on top of this what I consider the major breakthrough for us, with modclass. Now we could rewrite the above template as:

```heex
<VStack>
  <Text modclass="color-red bg:child-rect">
    Top
    <Rectangle template="child-rect" modclass="fill-gray bg:child-circle">
      <Circle template="child-circle" modclass="fill-blue frame:300:300" />
    </Rectangle>
  </Text>
</VStack>
```

This modclass API would then be backed up by a modclass/2 helper available for template rendering. Basically, something like this:

```elixir
element
|> attr_value("modclass")
|> String.split(" ")
|> Enum.map(&modclass(&1, assigns))
```

And we could write our own pattern-matching modclass/2 functions:

```elixir
def modclass("color-" <> color, _assigns) do
  foreground_color(String.to_atom(color))
end

def modclass("fill-" <> color, _assigns) do
  fill(String.to_atom(color))
end

def modclass("frame:" <> dims, _assigns) do
  # The dimensions arrive as strings, so convert them to integers
  [width, height] = String.split(dims, ":")
  frame(width: String.to_integer(width), height: String.to_integer(height))
end

def modclass("bg:" <> name, _assigns) do
  background(content: name)
end
```

So you can see how this new form would just expand to the previous form. We then collect and serialize the modifiers and inject that string into the modifiers attribute. This was a nice DSL that allowed us to compose modifiers and rely on Elixir’s pattern matching to build complex class names to match on. This felt right.
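For readers who want to run the expansion, here is a self-contained sketch of the idea. It is not the LVN implementation: the module name and the expand/2 helper are hypothetical, and each clause returns a {modifier, args} tuple as a stand-in for the real modifier functions.

```elixir
defmodule ModclassSketch do
  # Each clause pattern-matches on a class-name prefix and returns a
  # {modifier, args} tuple instead of calling a real modifier function.
  def modclass("color-" <> color, _assigns), do: {:foreground_color, [String.to_atom(color)]}
  def modclass("fill-" <> color, _assigns), do: {:fill, [String.to_atom(color)]}

  def modclass("frame:" <> dims, _assigns) do
    [width, height] = dims |> String.split(":") |> Enum.map(&String.to_integer/1)
    {:frame, [width: width, height: height]}
  end

  def modclass("bg:" <> name, _assigns), do: {:background, [content: name]}

  # Hypothetical helper: split the attribute value on whitespace and
  # expand every class name in order.
  def expand(class_string, assigns \\ %{}) do
    class_string
    |> String.split()
    |> Enum.map(&modclass(&1, assigns))
  end
end

expanded = ModclassSketch.expand("fill-blue frame:300:300")
IO.inspect(expanded)
```

Note that a class name with no matching clause raises a FunctionClauseError in this sketch, so a real implementation would need to decide how to handle unknown names.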

I originally decided we were going to roll with this and wrote my keynote around what we had. But the morning of the keynote I changed my mind. I did not feel comfortable selling the audience on something I knew wasn’t good enough yet. Our solution was very likely going to be a major API-breaking change, and getting people to start investing their time into one way of doing things that we were likely to toss didn’t feel right. So I changed this over to a status update and discussed some of what I’ve described here.

After my presentation José Valim grabbed me with some ideas on how to improve, and we roped in Chris McCord too. Beyond the rendering issues in the SwiftUI client, José was concerned our modifier solution would have server-side rendering performance problems because we could not really take advantage of diffing, at least not in the way that makes LiveView itself performant. Chris made an off-hand comment about just compiling a set of class names and storing them in the client. We had briefly discussed this idea within the LVN team but dismissed it as we wanted to keep as much of the development experience in Elixir as possible. Still, the concept stuck with me.

After the conference, we began to iterate on different approaches to solve the rendering issue. But even if we made the rendering faster, we were still stuck with two problems: LiveView’s diffing was impacted by the serialized modifiers stuffed into elements, and we had a ton of Elixir code to support because every modifier had been reimplemented.

So we began to work on what is now being dubbed our “stylesheet” approach. Basically, we are pushing the deserialization on the client to a boot-time concern (LiveView boot) rather than a run-time concern. In addition, we are relying on an AST representation so we have greater control with less asset bloat. This allows us to:

- Rely on the class attribute just like HTML, which plays nicely with LiveView’s diffing engine

- Use pattern matching to build complex class names that are very powerful

- Have a near-identical rendering performance to conventionally built SwiftUI apps

Here is how this will look:

```heex
<VStack>
  <Text class="color-red bg:child-rect">
    Top
    <Rectangle template="child-rect" class="fill-gray bg:child-circle">
      <Circle template="child-circle" class="fill-blue frame:300:300" />
    </Rectangle>
  </Text>
</VStack>
```

The template is identical to the modclass version, but now we are using class instead. We now have to write our own “stylesheet” using LiveViewNative.Stylesheet:

```elixir
defmodule MyApp.Sheets.SwiftUISheet do
  use LiveViewNative.Stylesheet, :swiftui

  ~SHEET"""
  "color-" <> color do
    foregroundColor(to_ime(color))
  end

  "fill-" <> color do
    fill(to_ime(color))
  end

  "frame:" <> dims do
    frame(width: to_width(dims), height: to_height(dims))
  end

  "bg:" <> name do
    background(content: name)
  end
  """
end
```

The ~SHEET sigil is a macro that will parse the sheet and expand to actual functions. Then at compile-time of the app we parse our SwiftUI templates and extract the class names, split on whitespace and call

SwiftUISheet.compile_string(class_names)

which gives us an AST. I’ll go into a breakdown of that AST in a future post. But the AST is Elixir-readable. The stylesheet is sent alongside the on_mount function to the client.

So we’ve done away with our server-side type checking and pretty much allow any function/modifier with any set of arguments and any types to be compiled. We are going to rely on SwiftUI itself to tell us if something is wrong. Similar to HTML/CSS if there are malformed modifier names, an incorrect argument signature, or mismatched types we will ignore that modifier and continue on with the remaining ones. We do not want to crash the client because a modifier doesn’t exist or due to a type issue. The client should always render, then provide logger information informing the developer that something didn’t match and guidance on how to fix it.
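The “ignore and continue” policy can be illustrated with a small sketch. This is only a model of the behavior: in LVN the check happens in the SwiftUI client, while the module below, its allow-list, and the tuple shape are hypothetical, and it writes to stderr as a stand-in for the client’s logger.

```elixir
defmodule LenientApplySketch do
  # Hypothetical allow-list standing in for "modifiers the client knows".
  @known [:foreground_color, :fill, :frame, :background]

  # Keeps the recognized modifiers in their original order and reports
  # the rest instead of raising.
  def apply_all(modifiers) do
    Enum.filter(modifiers, fn {name, _args} = modifier ->
      if name in @known do
        true
      else
        IO.puts(:stderr, "Ignoring unknown modifier: #{inspect(modifier)}")
        false
      end
    end)
  end
end

kept =
  LenientApplySketch.apply_all([
    {:fill, [:gray]},
    {:sparkle, []},
    {:frame, [width: 300, height: 300]}
  ])

IO.inspect(kept)
```

The unknown :sparkle entry is dropped with a message, and the modifiers on either side of it still apply.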

There’s even more complexity here that we didn’t cover, because modifiers in SwiftUI can render UI components, even without views passed to them. And those UI components should respond to events. But, because the modifier is not a template element, how can we attach phx-change or other phx- attributes to them? This is our current solution:

```elixir
~SHEET"""
"search" do
  searchable(change: event("search-change", throttle: 1_000))
end
"""
```

This will add a special node to the AST that we can capture when rendering in the client to inform it that we need to emit a phx-change="search-change" back to the LV endpoint and throttle that to every 1,000ms.

We’re still validating this API but so far most of the pieces are falling into place and we’re likely to roll with it.

In part two I’ll discuss the other blocker for v0.2.0 which is aligning with Phoenix’s own format convention and allowing the use of layout files.